Is Asia ready to tackle deepfakes and disinformation in the biggest election year in history?

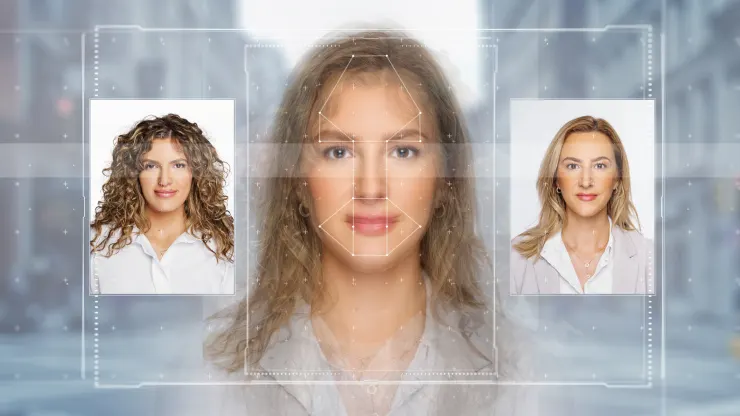

2024 is set to be the biggest global election year in history, and it coincides with a rapid rise in deepfakes. In APAC alone, the number of deepfakes surged by 1,530% from 2022 to 2023, according to a Sumsub report.

A video of the late former Indonesian president Suharto endorsing the political party he once led went viral ahead of the country’s February 14 elections.

The AI-generated deepfake, which mimicked his face and voice, received 4.7 million views on X alone. It was not an isolated incident.

In Pakistan, a deepfake of former Prime Minister Imran Khan announcing that his party would boycott the national elections emerged around the time of the vote. Meanwhile, in the U.S., voters in New Hampshire heard a deepfake of President Joe Biden urging them not to vote in the presidential primary.

Deepfakes of politicians are becoming increasingly common, especially with 2024 set to be the biggest global election year in history.

At least 60 countries and more than four billion people are expected to vote for their leaders and representatives this year, which makes deepfakes a serious concern.

A Sumsub report from November found that the number of deepfakes worldwide increased tenfold between 2022 and 2023.

Among industries, online media, including social media and digital advertising, saw the biggest rise in identity fraud, with a 274% increase between 2021 and 2023. The transportation, healthcare, professional services, and video game industries were also affected.

With so many elections this year, nation-state actors, particularly those from China, Russia, and Iran, are expected to launch misinformation or disinformation campaigns to sow disruption, according to cybersecurity firm CrowdStrike’s 2024 Global Threat Report.

Interference by a major power that decides it wants to rig a country’s election would be far more significant than political parties tinkering on the fringes, said Simon Chesterman, senior director of AI governance at AI Singapore.

Still, he said, most deepfakes will be produced by actors within the countries concerned.

Such domestic actors could include political rivals, opposition groups, or far-left and far-right extremists, said Carol Soon, principal research fellow and head of the department of society and culture at the Institute of Policy Studies in Singapore.

Deepfake dangers

At the very least, deepfakes pollute the information ecosystem, making it harder for people to find reliable information or form well-informed opinions about a party or candidate, Soon said.

Voters may also be put off a particular candidate if they come across content about a contentious issue that goes viral before it is exposed as fake, Chesterman said. “The worry is that before there’s time to put the genie back in the bottle, falsehoods propagated online will have spread, even if numerous countries have means to stop them.”

He added that regulation is often inadequate and hard to enforce. “We saw how quickly X could be taken over by the deepfake pornography involving Taylor Swift,” he said. “Too little, too late is often the case.”

Deepfakes can also play on people’s confirmation bias, said Adam Meyers, head of counter adversary operations at CrowdStrike: “Even if they know in their heart that it’s not true, if it’s the message they want and something they want to believe in they’re not going to let that go.”

Chesterman added that people may begin to doubt the legitimacy of elections if they see fabricated footage depicting electoral malpractice, such as ballot stuffing.

Conversely, candidates could dismiss genuine negative or unflattering material about themselves by claiming it is a deepfake, Soon said.

Who should be responsible?

There is a growing recognition that social media platforms need to take on more responsibility because of the quasi-public role they play, said Chesterman.

In February, 20 leading tech companies, including Microsoft, Meta, Google, Amazon, and IBM, artificial intelligence startup OpenAI, and social media companies such as Snap, TikTok, and X, announced a joint commitment to combat the deceptive use of AI in elections this year.

The tech accord is an important first step, Soon said, but its effectiveness will depend on how it is implemented and enforced. Because tech companies are adopting different safeguards across their platforms, a multi-pronged approach is needed, she added.

Tech companies will also need to be highly transparent about the decisions they make and the processes they put in place, Soon said.

Chesterman, however, said it is also unreasonable to expect private companies to carry out what are essentially public functions. Deciding what content to allow on social media is a difficult call, and companies may take months to make it, he said.

“We shouldn’t be depending solely on these companies’ good intentions,” Chesterman continued. “Regulations and expectations for these companies need to be set because of this.”

To help counter deepfakes, the non-profit Coalition for Content Provenance and Authenticity (C2PA) has introduced digital credentials for content. These credentials show viewers verified information about a piece of content, including who created it, where and when it was created, and whether generative AI was used to make it.

Companies including Adobe, Microsoft, Google, and Intel are members of C2PA.

OpenAI has announced that it will apply C2PA content credentials to images generated with its DALL·E 3 product early this year.

In a January interview at Bloomberg House during the World Economic Forum, OpenAI co-founder and CEO Sam Altman said the company was “quite focused” on ensuring its technology wasn’t being used to rig elections.

“I think our role is very different than the role of a distribution platform,” such as a social networking website or news publisher, Altman said. “We have to collaborate with them, so it’s like you generate here and you distribute here.” He added that the two sides need to maintain a productive dialogue.

Meyers proposed establishing a non-profit, bipartisan technical organization whose only task would be to detect and evaluate deepfakes.

The public could then send such a body content they suspect has been manipulated, he said. “Although it’s not perfect, people can at least rely on some kind of mechanism.”

However, Chesterman noted that while technology is a significant part of the solution, much still depends on consumers, who are not yet prepared for many of these changes.

Soon emphasized the significance of public education as well.

“In order to increase the public’s awareness and vigilance when they come across information, we need to keep up our outreach and engagement efforts,” she stated.

The public must exercise greater caution; in addition to verifying information when it seems extremely suspect, users should confirm important details, particularly before forwarding them to others, she said.

“Everyone can contribute in some way,” Soon said. “It’s all hands on deck.”

— Ryan Browne and MacKenzie Sigalos of CNBC contributed to this article.